XAI Assistant

Operating instructions

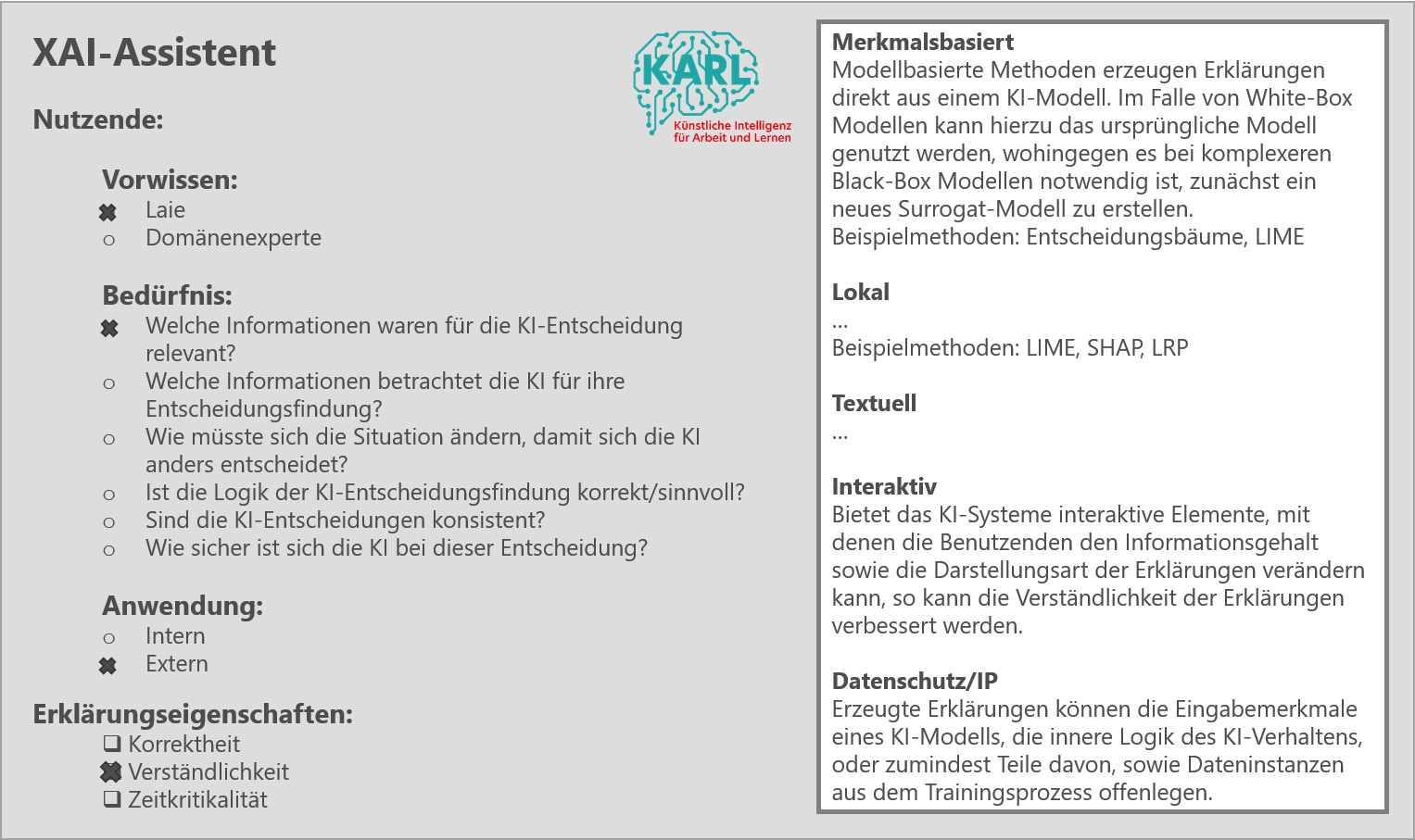

The questionnaire is structured as follows:

1. Users:

This section asks for information about the end users under consideration.

- Prior knowledge:

Either domain experts or laypersons are considered. Domain experts have expert knowledge in the domain under consideration, but not necessarily AI expertise. An example of this would be an insurance agent. Laypersons have neither AI expertise nor domain knowledge. An example of this would be a person to be insured.

- Need:

A list of questions from which one can be selected; the explanation of the AI decision is meant to answer that question. The selected question represents the end user's need for an explanation.

- Application:

Additional information about the application context. Internal refers to use within the company that operates the AI system. External refers to a private end user, usually a customer of that company.

2. Explanatory properties:

Three typical properties of explanations can be selected if they are particularly relevant for the specific XAI use case: the correctness of the XAI method used, the comprehensibility of the explanations provided and the time-criticality of the calculation and provision of the explanations.

After completing the questionnaire, the relevant information is collected and displayed in an output field.
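The questionnaire structure described above can be sketched as a small data model. This is only an illustration, not the XAI Assistant's actual implementation: all class, field, and method names (`QuestionnaireAnswers`, `summary`, and so on) are assumptions, and the example question is hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class PriorKnowledge(Enum):
    DOMAIN_EXPERT = "domain expert"  # domain knowledge, not necessarily AI expertise
    LAYPERSON = "layperson"          # neither AI expertise nor domain knowledge


class Application(Enum):
    INTERNAL = "internal"  # used within the company that uses the AI system
    EXTERNAL = "external"  # private end user, usually a customer


class ExplanationProperty(Enum):
    CORRECTNESS = "correctness"
    COMPREHENSIBILITY = "comprehensibility"
    TIME_CRITICALITY = "time-criticality"


@dataclass
class QuestionnaireAnswers:
    """Hypothetical container for one completed questionnaire."""
    prior_knowledge: PriorKnowledge
    need: str  # the selected question the explanation should answer
    application: Application
    properties: set[ExplanationProperty] = field(default_factory=set)

    def summary(self) -> str:
        """Collect the answers into one text block, in the spirit of the output field."""
        props = ", ".join(sorted(p.value for p in self.properties)) or "none selected"
        return (
            f"Prior knowledge: {self.prior_knowledge.value}\n"
            f"Need: {self.need}\n"
            f"Application: {self.application.value}\n"
            f"Explanatory properties: {props}"
        )


answers = QuestionnaireAnswers(
    prior_knowledge=PriorKnowledge.LAYPERSON,
    need="Why was this decision made?",  # hypothetical example question
    application=Application.EXTERNAL,
    properties={ExplanationProperty.COMPREHENSIBILITY},
)
print(answers.summary())
```

Modeling the selectable options as enums keeps the sketch close to the questionnaire, where each item offers a fixed set of choices, while the need stays a free string because the actual list of questions is not given here.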

Contact person

robin.weitemeyer@h-ka.de

Dr. Jutta Hild | Fraunhofer IOSB

jutta.hild@iosb.fraunhofer.de

Maximilian Becker | Fraunhofer IOSB

maximilian.becker@iosb.fraunhofer.de


XAI Assistant

Target group

Expected result

General conditions

Operating instructions

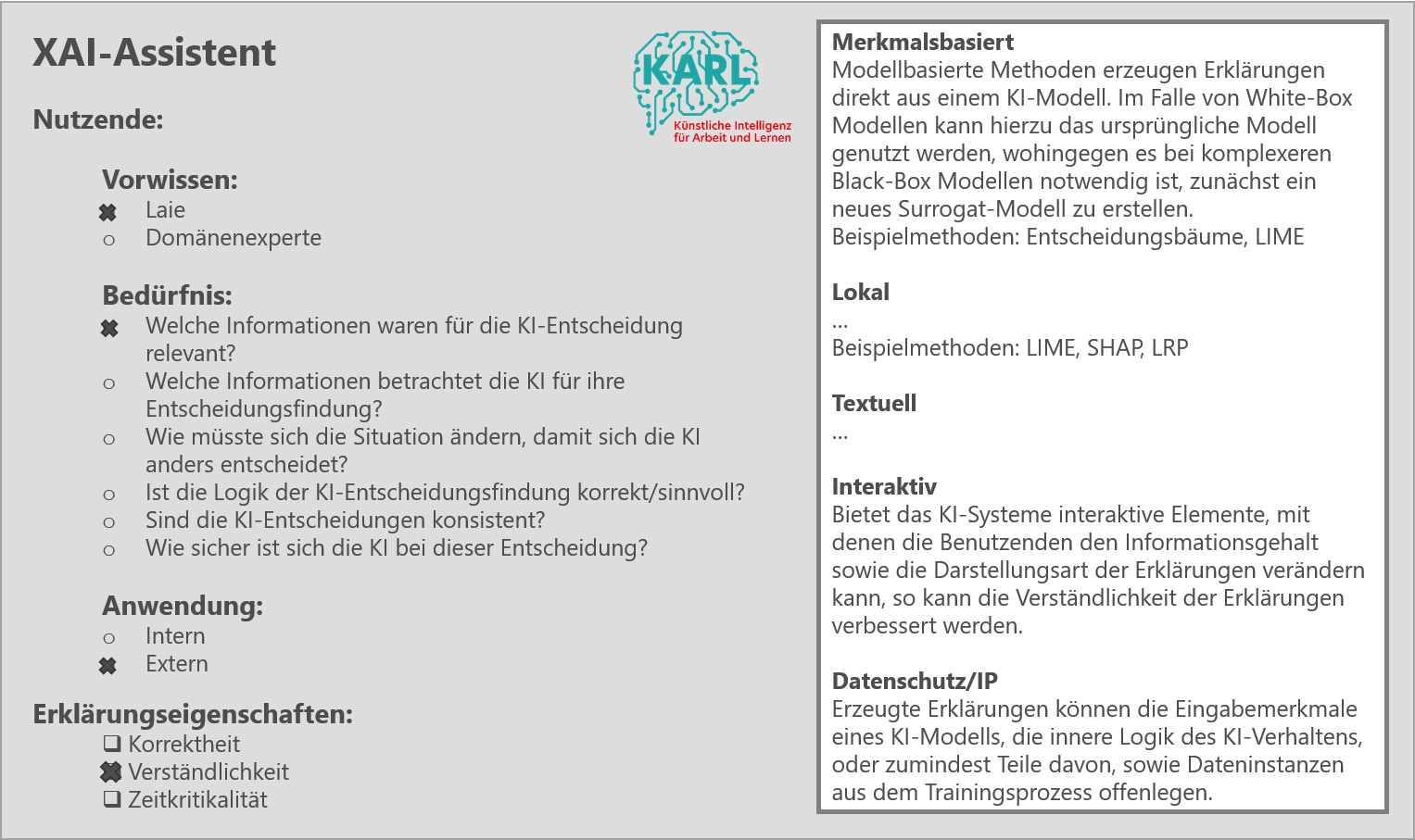

The questionnaire is structured as follows:

1. Users:

This section asks for information about the end users under consideration.

- Prior knowledge:

Either domain experts or laypersons are considered. Domain experts have expert knowledge in the domain under consideration, but not necessarily AI expertise. An example of this would be an insurance agent. Laypersons have neither AI expertise nor domain knowledge. An example of this would be a person to be insured.

- Need:

A collection of questions from which a question can be selected to be answered by an explanation of the AI decision. This represents the end user's need for an explanation.

- Application:

Additional information about the application context. Internal refers to an application within the company that uses the AI system. External refers to a private end user. This is usually a customer of the company using AI.

2. Explanatory properties:

Three typical properties of explanations can be selected if they are particularly relevant for the specific XAI use case: the correctness of the XAI method used, the comprehensibility of the explanations provided and the time-criticality of the calculation and provision of the explanations.

After completing the questionnaire, the relevant information is collected and displayed in an output field.

Contact person

robin.weitemeyer@h-ka.de

Dr. Jutta Hild | Fraunhofer IOSB

jutta.hild@iosb.fraunhofer.de

Maximilian Becker | Fraunhofer IOSB

maximilian.becker@iosb.fraunhofer.de

Format

To the offer